Intermezzo #1: Some Questions I Have

A few questions I was pondering this week (all the while being a bit under the weather and not doing enough reading worth writing about).

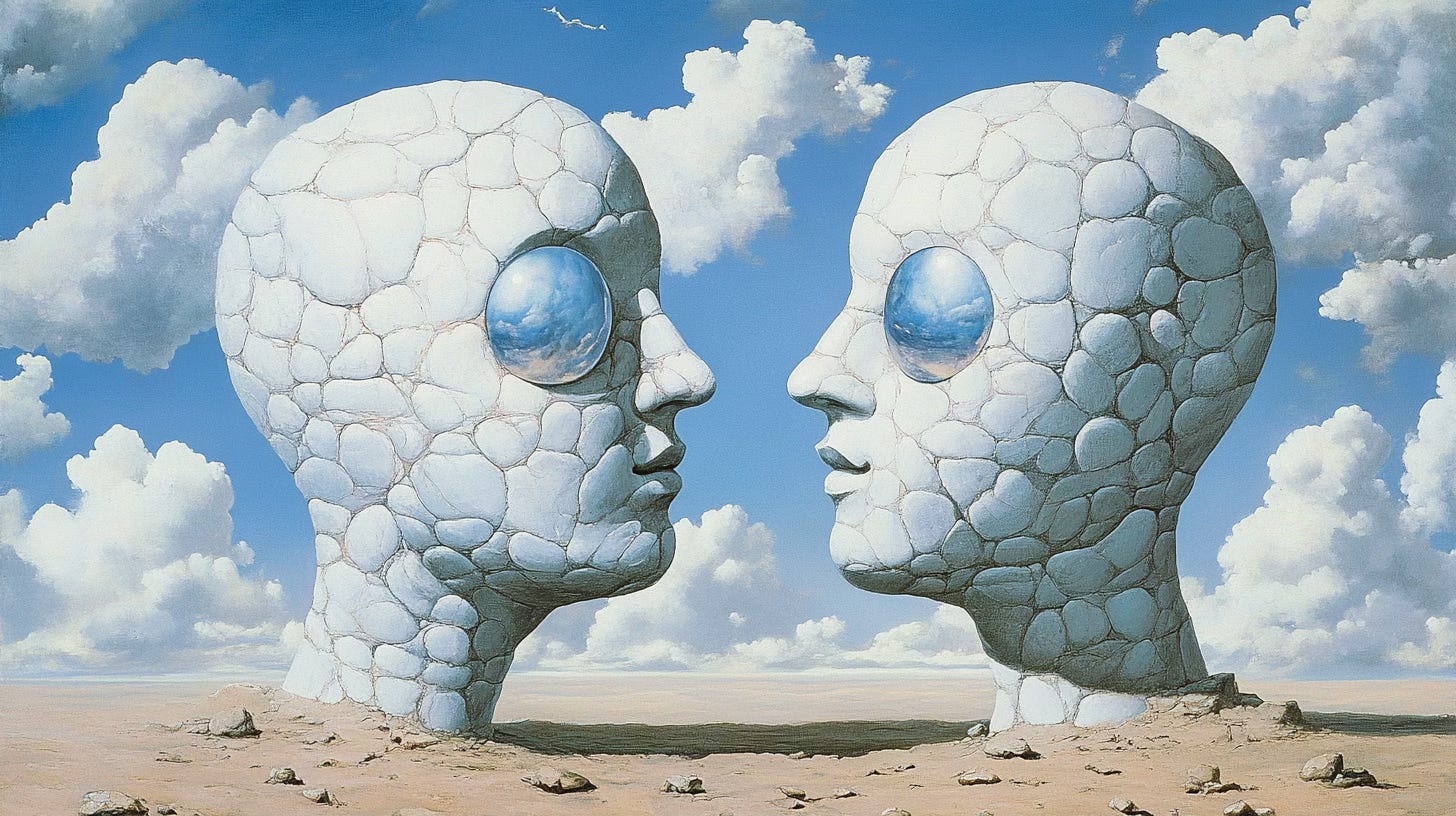

What if our greatest attempt to replicate human intelligence has actually taught us that we're nothing like computers at all? And what if, in our quest to create moral machines, we've been asking entirely the wrong questions?

As we go deeper into the age of AI (or toward an imminent third AI winter), we find ourselves at a peculiar crossroads. Our initial models of human cognition, borrowed from the precise world of computation, are crumbling before our eyes. The clean, algorithmic perspective that once seemed so promising now appears hopelessly inadequate in explaining the messy, beautiful complexity of human thought.

The journey from logic gates to neural networks has revealed something profound: our minds don't operate like the computers we built to emulate them. Instead of processing information through discrete, sequential steps, our consciousness emerges from a vast network of interconnected patterns, each influencing and being influenced by countless others.

But this realization leads us to an even more challenging question: If we can't even accurately model basic human cognition computationally, how can we possibly hope to create machines with genuine moral agency?

The answer might lie in abandoning our traditional notion of contained, independent moral agents altogether. What if moral cognition, like all forms of thought, extends beyond the boundaries of individual minds? This brings us to a proposition: perhaps we should stop trying to create independent moral machines and instead focus on developing systems that participate in extended moral networks with humans.

Consider this: What if moral development isn't about programming rules but about creating systems capable of participating in the same kind of dynamic moral learning that humans experience? This isn't just a technical challenge – it's a fundamental reimagining of what artificial moral agency could mean.

The cautionary tale of ELIZA, the early chatbot that seemed more intelligent than it was, still haunts our field. But perhaps its lesson isn't about the limitations of artificial intelligence, but about the importance of genuine interaction in moral development.

How do we bridge the gap between philosophical insight and technical implementation? The answer might lie in combining predictive processing architectures with virtue-based learning objectives. This approach doesn't just simulate ethical behavior – it enables participation in genuine moral cognition through dynamic interaction with human moral agents.

As we develop more sophisticated AI systems, we're discovering that consciousness and moral agency aren't computational problems to be solved, but emergent properties to be cultivated through interaction and relationship. This realization leads us to a fascinating paradox: the more we try to replicate human intelligence artificially, the more we understand how uniquely non-mechanical our own cognition is.

What does this mean for the future of AI development? Instead of trying to create independent moral agents, we should focus on developing systems that can participate meaningfully in extended moral cognitive networks. This isn't just a technical pivot – it's a fundamental shift in how we conceive of artificial intelligence and its role in human society.

New questions:

How do we design AI systems that complement rather than replicate human moral cognition?

What role does embodied experience play in moral development, and how can we account for this in AI systems?

How do we ensure that extended moral cognitive systems remain anchored in human values while allowing for genuine growth and development?

As we stand at this juncture in technological development, we must ask ourselves: Are we ready to abandon our mechanistic models of mind and embrace a more nuanced, interconnected vision of intelligence and morality?

The challenge of AI ethics becomes not one of programming perfect behavior, but of fostering genuine moral growth through extended cognitive networks that span both human and artificial agents.

But I’ll leave myself the option of being totally wrong here as well.

November 2024